This was an experiment to see whether I could use AI to refine a rough render.

The goal was to produce higher-quality artwork more quickly with the help of AI.

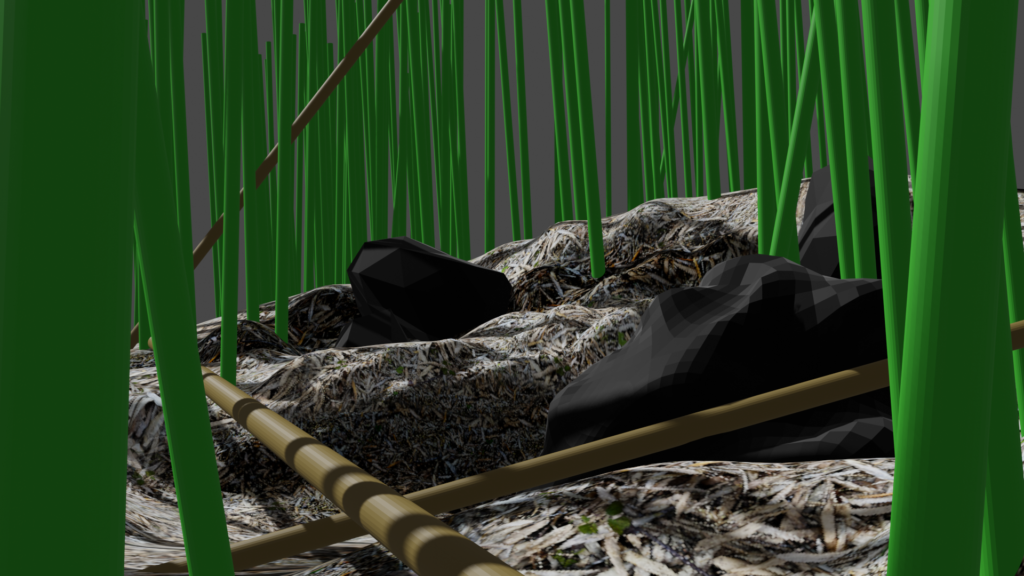

I created this rough render in Blender and fed it into the AI for refinement.

The scene is inspired by a bamboo forest. To make it easier for the AI to interpret, I color-coded the elements, for example by tinting the dried bamboo brown. The processed result is shown below.

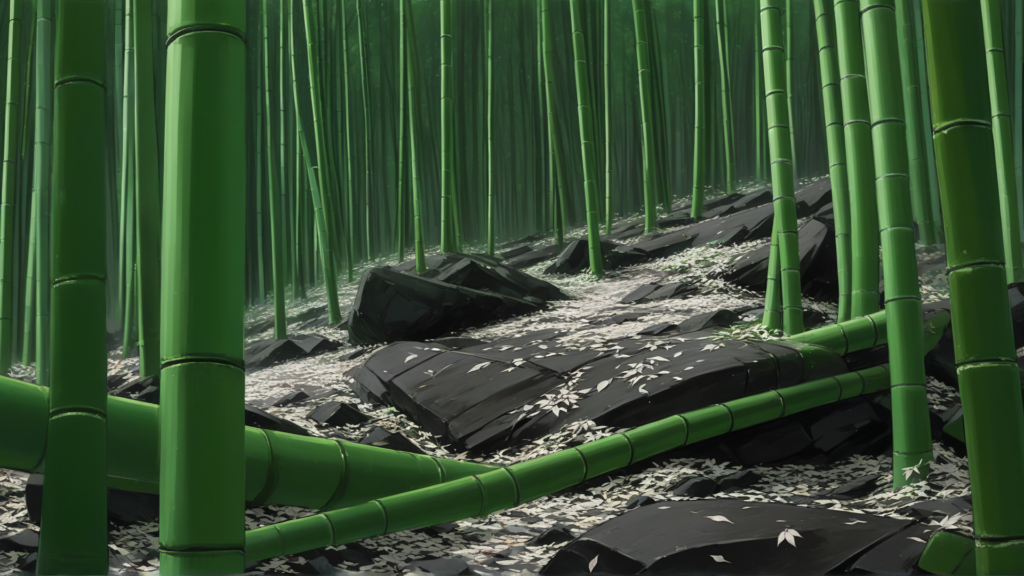

The image quality is decent, but the result differed from what I had originally intended.

I tried several variations by tweaking the prompts.

In particular, I wanted to depict fallen bamboo, but it didn’t come out as expected.

The AI also occasionally generated large rocks that were never part of the scene, so I’ll need to explore masking and other ways to make finer adjustments.
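Because the elements in the Blender render were painted in flat, distinct colors, those colors could also serve as a starting point for masks. The snippet below is a minimal, hypothetical sketch of that idea and not part of the workflow used here. It assumes the render has already been loaded as rows of `(R, G, B)` tuples. The function name `color_mask`, the brown RGB value, and the tolerance are all illustrative assumptions. The snippet does not cover how such a mask would be connected to an inpainting step in ComfyUI.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def color_mask(pixels: List[List[RGB]], target: RGB, tolerance: int = 30) -> List[List[int]]:
    """Hypothetical helper: return a binary mask (255 = selected, 0 = not).

    A pixel is selected when each of its R, G, B channels is within
    `tolerance` of the target color. The tolerance allows for shading
    that shifts the flat color assigned in Blender.
    """
    mask: List[List[int]] = []
    for row in pixels:
        mask_row: List[int] = []
        for r, g, b in row:
            close = (
                abs(r - target[0]) <= tolerance
                and abs(g - target[1]) <= tolerance
                and abs(b - target[2]) <= tolerance
            )
            mask_row.append(255 if close else 0)
        mask.append(mask_row)
    return mask


# Assumed flat color for the "dried bamboo" tint (illustrative value only).
BROWN: RGB = (120, 80, 40)

# A tiny 2x2 stand-in for a render: two brownish pixels, one green, one dark.
render = [
    [(120, 82, 38), (30, 140, 60)],
    [(10, 10, 10), (125, 75, 45)],
]

mask = color_mask(render, BROWN)
print(mask)  # [[255, 0], [0, 255]]
```

A per-channel threshold is the simplest choice. Strong lighting or ambient occlusion in the render can push shaded pixels outside the tolerance, which is a practical argument for exporting a separate unlit color pass from Blender for masking.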

As an experiment in AI-based image generation using ComfyUI, the results were fairly good.

However, I noticed that while AI tends to produce something that “looks nice,” the results can be too vague or miss the intended concept.

For use in real projects, I’ll need more precise control over the details.